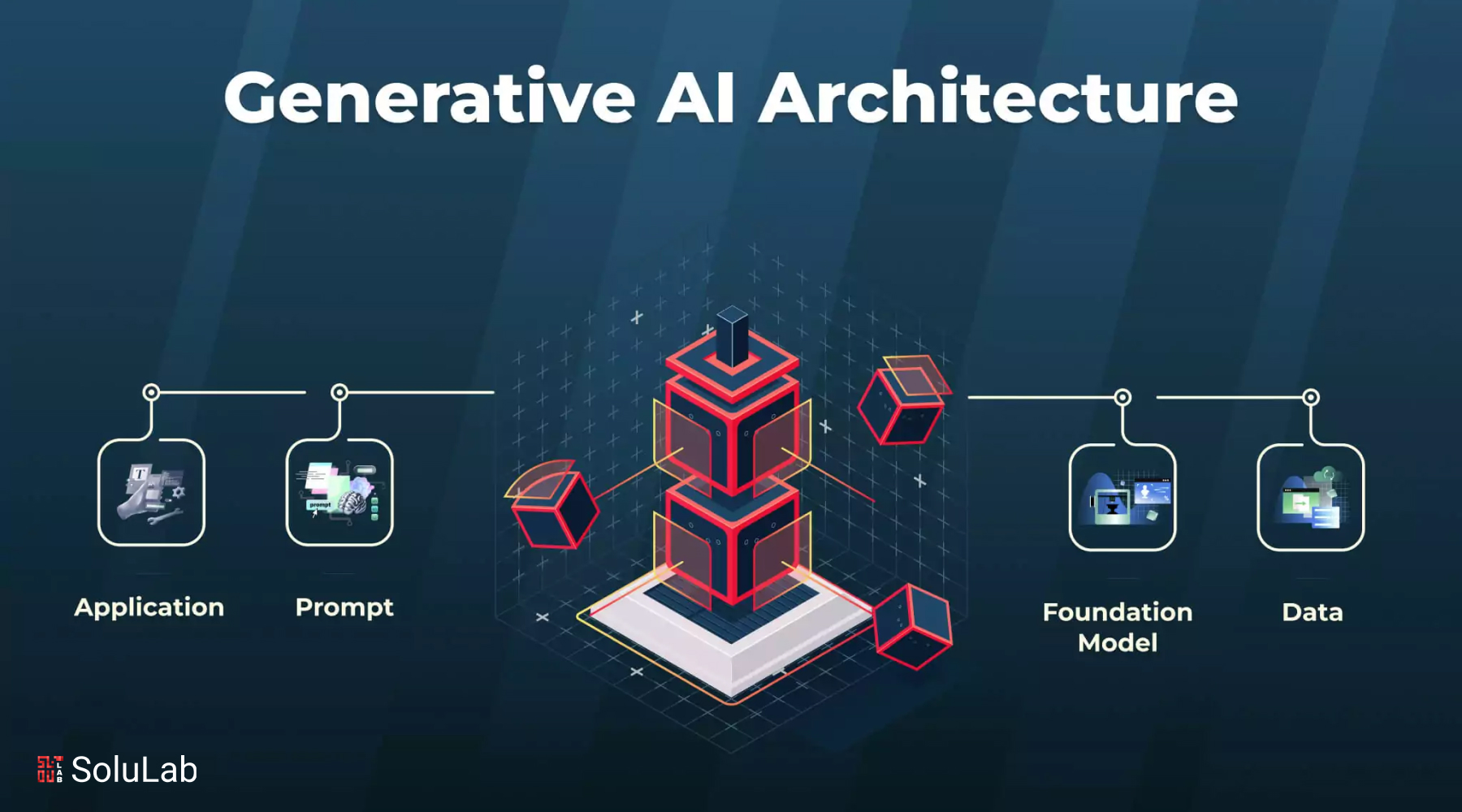

Generative AI has business applications beyond those covered by discriminative models. Let's look at the general-purpose models that can be applied to a wide range of problems and produce impressive results. Various algorithms and related models have been developed and trained to create new, realistic content from existing data. Some of these models, each with distinct mechanisms and capabilities, are at the forefront of advances in fields such as image generation, text translation, and data synthesis.

A generative adversarial network, or GAN, is a machine learning framework that pits two neural networks, a generator and a discriminator, against each other, hence the "adversarial" part. The contest between them is a zero-sum game, where one agent's gain is another agent's loss. GANs were invented by Ian Goodfellow and his colleagues at the University of Montreal in 2014.
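The zero-sum contest is commonly written as a minimax objective. The form below follows the standard GAN formulation; the symbols $G$, $D$, $p_{\text{data}}$, and $p_z$ are notation introduced here for illustration and are not used elsewhere in this article.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

Here $D(x)$ is the discriminator's estimate of the probability that $x$ is real, and $G(z)$ is a sample the generator produces from random noise $z$. The discriminator tries to maximize $V$, while the generator tries to minimize it.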

The closer the result is to 0, the more likely the output is fake. Conversely, numbers closer to 1 indicate a higher likelihood that the sample is real. Both the generator and the discriminator are often implemented as CNNs (convolutional neural networks), especially when working with images. So, the adversarial nature of GANs lies in a game-theoretic scenario in which the generator network must compete against an adversary.
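To make the 0-to-1 score concrete, here is a minimal sketch of how a raw discriminator output could be turned into a probability and a verdict. It assumes the discriminator ends in a sigmoid, which is a common choice but is not stated in the text; the 0.5 threshold and the sample scores are also illustrative assumptions.

```python
import math

def sigmoid(logit: float) -> float:
    """Squash a raw discriminator score into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-logit))

def verdict(probability_real: float, threshold: float = 0.5) -> str:
    """Label a sample 'real' or 'fake' from the discriminator's probability."""
    return "real" if probability_real >= threshold else "fake"

# Hypothetical raw scores (logits) for three samples.
for logit in (-4.0, 0.0, 4.0):
    p = sigmoid(logit)
    print(f"logit={logit:+.1f}  p(real)={p:.3f}  ->  {verdict(p)}")
```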

AI Project Management

Its adversary, the discriminator network, attempts to distinguish between samples drawn from the training data and those drawn from the generator. In this scenario, there is always a winner and a loser. Whichever network fails is updated while its rival remains unchanged. A GAN is considered successful when the generator creates a fake sample so convincing that it can fool both the discriminator and humans.
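The winner-and-loser dynamic can be illustrated with the loss each network would see for a given pair of discriminator scores. This is a sketch under assumptions: it uses binary cross-entropy and the widely used "non-saturating" generator loss, neither of which is specified in the text, and the scores are made up for illustration.

```python
import math

def bce(prediction: float, target: float) -> float:
    """Binary cross-entropy for a single prediction in (0, 1)."""
    eps = 1e-12  # guards against log(0)
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def discriminator_loss(score_real: float, score_fake: float) -> float:
    """The discriminator wants real samples scored 1 and fakes scored 0."""
    return bce(score_real, 1.0) + bce(score_fake, 0.0)

def generator_loss(score_fake: float) -> float:
    """The generator wants its fake sample to be scored as real (1)."""
    return bce(score_fake, 1.0)

# Hypothetical discriminator scores for one real and one generated sample.
fooled = {"score_real": 0.9, "score_fake": 0.8}  # the fake passes as real
caught = {"score_real": 0.9, "score_fake": 0.1}  # the fake is detected

for name, scores in (("fooled", fooled), ("caught", caught)):
    d = discriminator_loss(**scores)
    g = generator_loss(scores["score_fake"])
    print(f"{name}: discriminator loss={d:.3f}, generator loss={g:.3f}")
```

When the fake is detected, the generator's loss is the larger one, so it is the network that has the most to learn from the update; when the discriminator is fooled, the roles reverse.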

Described in a 2017 Google paper, the transformer architecture is a machine learning framework that is highly effective for natural language processing (NLP) tasks. It learns to find patterns in sequential data such as written text or spoken language. Based on the context, the model can predict the next element of the series, for example, the next word in a sentence.

AI And Automation

A vector represents the semantic features of a word, with similar words having vectors that are close in value. For example, the word crown might be represented by the vector [3, 103, 35], while apple could be [6, 7, 17], and pear might look like [6.5, 6, 18]. Of course, these vectors are just illustrative; the real ones have many more dimensions.
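Using the three illustrative vectors above, a short sketch shows what "close in value" means in practice. Euclidean distance is chosen here for simplicity; it is one possible measure of closeness, not necessarily the one a given model uses.

```python
import math

# Illustrative three-dimensional vectors taken from the text above.
vectors = {
    "crown": [3.0, 103.0, 35.0],
    "apple": [6.0, 7.0, 17.0],
    "pear": [6.5, 6.0, 18.0],
}

def euclidean_distance(a, b):
    """Straight-line distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

apple_pear = euclidean_distance(vectors["apple"], vectors["pear"])
apple_crown = euclidean_distance(vectors["apple"], vectors["crown"])

print(f"apple <-> pear:  {apple_pear:.2f}")
print(f"apple <-> crown: {apple_crown:.2f}")
```

The two fruits end up far closer to each other than either is to crown, which is the property the embedding is meant to capture.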

At this stage, information about the position of each token within the sequence is added in the form of another vector, which is summed with the input embedding. The result is a vector reflecting the word's initial meaning and its position in the sentence. It is then fed to the transformer neural network, which consists of two blocks.
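The summing step can be sketched as follows. The sinusoidal encoding used here is the scheme from the original transformer paper and is an assumption on our part, since the text does not say how the position vector is built; the four-dimensional embedding is hypothetical.

```python
import math

def positional_encoding(position: int, d_model: int) -> list[float]:
    """Sinusoidal position vector: sine on even indices, cosine on odd ones."""
    encoding = []
    for i in range(d_model):
        angle = position / (10000 ** ((2 * (i // 2)) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def add_position(embedding: list[float], position: int) -> list[float]:
    """Sum a token embedding with the vector for its position."""
    pe = positional_encoding(position, len(embedding))
    return [e + p for e, p in zip(embedding, pe)]

token_embedding = [0.2, -0.1, 0.4, 0.7]  # hypothetical 4-dimensional embedding
first = add_position(token_embedding, position=0)
sixth = add_position(token_embedding, position=5)
print(first)
print(sixth)
```

The same word receives a different final vector depending on where it appears, which is how the network can tell word order apart.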

Mathematically, the relations between words in a phrase look like distances and angles between vectors in a multidimensional vector space. This mechanism, known as self-attention, is able to detect the subtle ways in which even distant data elements in a series influence and depend on each other. For example, in the sentences "I poured water from the pitcher into the cup until it was full" and "I poured water from the pitcher into the cup until it was empty", a self-attention mechanism can distinguish the meaning of "it": in the former case, the pronoun refers to the cup, in the latter to the pitcher.
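A minimal sketch of self-attention in its usual scaled dot-product form is shown below. It is simplified under stated assumptions: real transformers derive queries, keys, and values from learned projections, whereas this toy reuses the same hypothetical two-dimensional token vectors for all three.

```python
import math

def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How strongly this token relates to every token in the sequence.
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # New representation: weighted mix of all value vectors.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical vectors for three tokens, reused as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights says how much one token "attends" to every other token, regardless of how far apart they sit in the sequence.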

A softmax function is used at the end to calculate the likelihood of different outputs and choose the most probable option. Then the generated output is appended to the input, and the whole process repeats itself.

The diffusion model is a generative model that creates new data, such as images or sounds, by mimicking the data on which it was trained.
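Before moving on to diffusion, the transformer's last step (score the candidates, pick the most probable one, append it, repeat) can be sketched as a loop. Everything here is a toy assumption: `toy_logits` is a hypothetical stand-in for a trained network, the vocabulary is made up, and picking the single most probable token (greedy decoding) is only one of several selection strategies.

```python
import math

VOCAB = ["the", "cup", "was", "full", "<end>"]  # hypothetical vocabulary

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def toy_logits(tokens):
    """Hypothetical stand-in for a trained model: favors the next word in VOCAB."""
    last = VOCAB.index(tokens[-1])
    target = min(last + 1, len(VOCAB) - 1)
    return [3.0 if i == target else 0.0 for i in range(len(VOCAB))]

def generate(prompt, max_steps=10):
    tokens = list(prompt)
    for _ in range(max_steps):
        probabilities = softmax(toy_logits(tokens))
        best = max(range(len(VOCAB)), key=lambda i: probabilities[i])
        if VOCAB[best] == "<end>":
            break
        tokens.append(VOCAB[best])  # the output is appended to the input
    return tokens

result = generate(["the"])
print(result)
```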

Think of the diffusion model as an artist-restorer who has studied paintings by old masters and can now paint canvases in the same style. The diffusion model does roughly the same thing in three main stages. Direct diffusion gradually introduces noise into the original image until the result is just a chaotic set of pixels.
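The noising stage can be sketched in a few lines. This assumes Gaussian noise and a constant noise schedule (`betas`), as in common diffusion formulations; the text does not specify either, and the four-pixel "image" is hypothetical.

```python
import math
import random

def forward_diffusion(pixels, betas, rng):
    """Add Gaussian noise step by step; returns the image after every step."""
    history = [list(pixels)]
    current = list(pixels)
    for beta in betas:
        current = [
            math.sqrt(1.0 - beta) * x + math.sqrt(beta) * rng.gauss(0.0, 1.0)
            for x in current
        ]
        history.append(current)
    return history

def signal_fraction(betas):
    """Share of the original signal left after each step."""
    remaining, fractions = 1.0, []
    for beta in betas:
        remaining *= 1.0 - beta
        fractions.append(math.sqrt(remaining))
    return fractions

image = [0.9, 0.1, 0.5, 0.7]  # hypothetical 4-pixel grayscale image
betas = [0.05] * 100          # assumed constant noise schedule
history = forward_diffusion(image, betas, random.Random(0))
fractions = signal_fraction(betas)
print(f"signal left after 1 step: {fractions[0]:.3f}")
print(f"signal left after {len(betas)} steps: {fractions[-1]:.3f}")
```

After enough steps almost none of the original signal survives, which is the "chaotic set of pixels" the text describes.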

If we return to our analogy of the artist-restorer, direct diffusion is handled by time, which covers the painting with a network of cracks, dust, and grease; sometimes the painting is also reworked, adding certain details and removing others. Learning resembles studying a painting to grasp the old master's original intent. The model carefully analyzes how the added noise alters the data.

Multimodal AI

This understanding allows the model to effectively reverse the process later on. After learning, the model can reconstruct the distorted data via a process called reverse diffusion. It starts from a noise sample and removes the blur step by step, the same way our artist removes contaminants and later layers of paint.
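The step-by-step denoising can be sketched with a deterministic reverse update. This is a toy under loud assumptions: the `predict_noise` oracle stands in for a trained network and simply returns the true noise, the update rule is the deterministic (DDIM-style) variant rather than whatever a specific product uses, and the image and schedule are hypothetical.

```python
import math
import random

def alpha_bars(betas):
    """Cumulative product of (1 - beta): how much signal survives up to each step."""
    product, result = 1.0, []
    for beta in betas:
        product *= 1.0 - beta
        result.append(product)
    return result

def noisy_sample(x0, noise, alpha_bar):
    """Jump straight to a given noise level in closed form."""
    return [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
        for x, e in zip(x0, noise)
    ]

def reverse_diffusion(x_t, predict_noise, bars):
    """Walk back from the noisiest step to step 0, removing noise at each step."""
    current = list(x_t)
    for t in range(len(bars) - 1, -1, -1):
        eps = predict_noise(current, t)
        # Estimate of the clean data implied by the predicted noise.
        x0_hat = [
            (x - math.sqrt(1.0 - bars[t]) * e) / math.sqrt(bars[t])
            for x, e in zip(current, eps)
        ]
        previous_bar = bars[t - 1] if t > 0 else 1.0
        # Re-noise the estimate to the (lower) noise level of the previous step.
        current = [
            math.sqrt(previous_bar) * x0 + math.sqrt(1.0 - previous_bar) * e
            for x0, e in zip(x0_hat, eps)
        ]
    return current

rng = random.Random(0)
image = [0.9, 0.1, 0.5, 0.7]  # hypothetical 4-pixel image
noise = [rng.gauss(0.0, 1.0) for _ in image]
bars = alpha_bars([0.05] * 50)  # assumed constant noise schedule
x_T = noisy_sample(image, noise, bars[-1])

# Oracle standing in for a trained network: it "predicts" the true noise.
restored = reverse_diffusion(x_T, lambda x, t: noise, bars)
print([round(v, 6) for v in restored])
```

With a perfect noise predictor the original image is recovered; a real model only approximates the noise, so its output is a new sample in the style of the training data rather than an exact copy.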

Think of latent representations as the DNA of an organism. DNA holds the core instructions needed to build and maintain a living being. Similarly, latent representations contain the fundamental elements of the data, allowing the model to regenerate the original information from this encoded essence. If you change the DNA molecule just a little, you get a completely different organism.

AI For Developers

Say, the woman in the second top-right image looks a bit like Beyoncé but, at the same time, we can see that it's not the pop singer. In image-to-image translation, as the name suggests, generative AI transforms one type of image into another. There are a number of image-to-image translation variants. One of them, style transfer, involves extracting the style from a famous painting and applying it to another image.

The results of all these programs are quite similar. However, some users note that, on average, Midjourney draws a little more expressively, while Stable Diffusion follows the request more closely at default settings. Researchers have also used GANs to produce synthesized speech from text input.

How Does AI Contribute To Blockchain Technology?

That said, the music may change according to the atmosphere of the game scene or depending on the intensity of the user's workout in the gym.

Logically, videos can also be generated and converted in much the same way as images. While 2023 was marked by breakthroughs in LLMs and a boom in image generation technologies, 2024 has seen significant advances in video generation. At the beginning of 2024, OpenAI introduced a truly impressive text-to-video model called Sora. Sora is a diffusion-based model that generates video from static noise.

NVIDIA's Interactive AI Rendered Virtual World

Such synthetically created data can help develop self-driving cars, which can use generated virtual-world training datasets for pedestrian detection. Whatever the technology, it can be used for both good and bad. Of course, generative AI is no exception. At the moment, a couple of challenges exist.

When we say this, we do not mean that tomorrow machines will rise up against humanity and destroy the world. Let's be honest, we're pretty good at that ourselves. Because generative AI can self-learn, its behavior is difficult to control. The outputs it provides can often be far from what you expect.

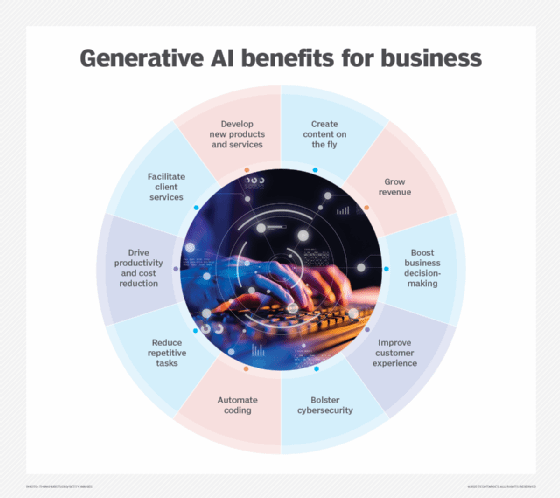

That's why so many businesses are implementing dynamic and intelligent conversational AI models that customers can interact with through text or speech. In addition to customer service, AI chatbots can supplement marketing efforts and support internal communications.

